Artificial intelligence is no longer confined to tech labs or science fiction. Today, it is embedded in everyday business processes — and nowhere is that more visible than in recruitment. From drafting inclusive job postings to screening resumes and analysing video interviews, AI-powered tools now influence whether a candidate gets noticed, gets an interview, or gets rejected before a human even looks at their application.

When I began my research into AI and recruitment, my goal was simple: to understand whether these technologies help or harm the push for fairness, diversity, and inclusion in hiring. What I discovered is that while AI offers efficiency and scale, it also carries serious risks of reinforcing existing inequalities — often in subtle, invisible ways.

In this article, I want to share some of the key insights from my research paper, Searching for Bias within AI-Powered Tools Used in the Recruitment Process. My hope is that by unpacking how these systems work (and sometimes fail), we can all start asking better questions about the future of hiring.

Why AI in Recruitment?

Companies are under pressure. The volume of applications for open positions is overwhelming, hiring cycles are long, and the demand for diverse and inclusive workplaces is higher than ever. AI seems like the perfect solution:

- It promises speed through automation.

- It claims to reduce unconscious bias by removing human subjectivity.

- It offers standardised processes and lower costs.

In fact, a 2019 survey found that 88% of global companies were already using AI in HR in some form, and this number continues to grow. But as I dug deeper, I saw a troubling pattern: AI doesn’t operate in a vacuum. It learns from historical data — and history isn’t neutral.

Where Bias Creeps In

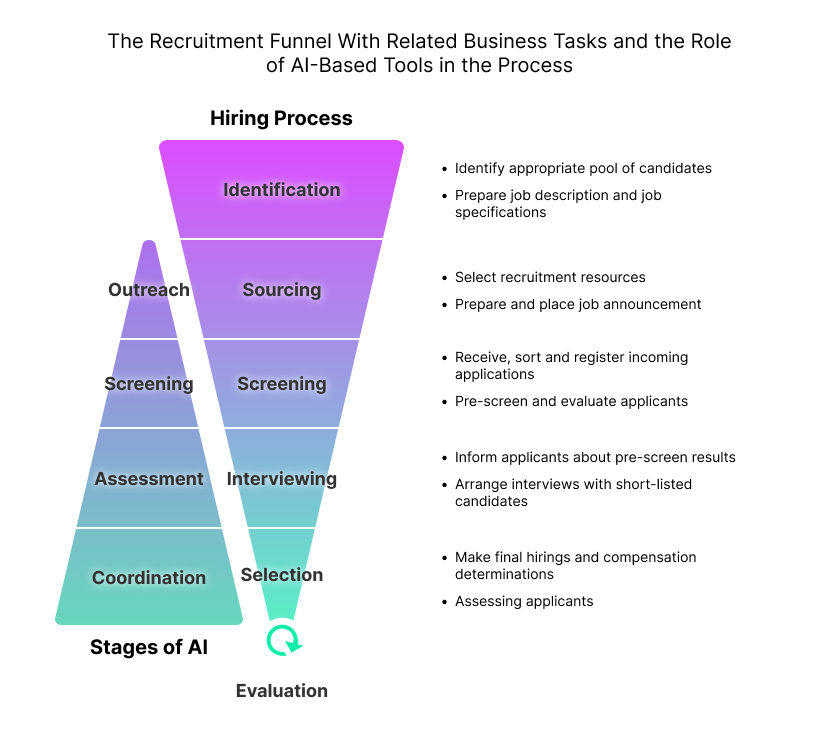

Bias in AI recruitment isn’t always obvious. It doesn’t have to be a line of code that says “prefer men over women.” Instead, it’s often hidden in the data, the training methods, or even in how algorithms are deployed. Let’s look at three critical stages of recruitment where bias emerges.

1. Outreach — Who Sees the Job Posting?

Job postings are no longer just put on a notice board. They’re optimised and targeted by algorithms:

- Studies show that gendered language in job ads can discourage applicants, especially women. Although overt discrimination in job ads is legally prohibited, subtle bias persists through masculine- or feminine-coded wording. Tools like Textio help counter this by analysing job descriptions with a Gender Tone Meter and suggesting more inclusive language. This broadens the applicant pool and promotes diversity. While not perfect, such AI tools encourage employers to write more equitable and welcoming job ads.

- Beyond job descriptions, the choice of online platform also shapes recruitment. Job boards, social media, and search engines allow companies to micro-target candidates based on factors such as age, gender, interests, and job history. Platforms like LinkedIn use profile data and audience-expansion features to reach specific groups, which also means that not everyone sees the same job postings. Because advertising space is limited and usually paid for, these platforms play a major role in deciding who gets access to opportunities.

- Facebook’s ad-delivery system uses user profiles and historical data to decide who sees job postings, and this can lead to discrimination even when nobody intends it. Studies found that some job ads were delivered disproportionately to men and to white users, reflecting existing labour-market biases. Although advertisers did not always request this, such skewed delivery can violate equal employment laws. Follow-up research confirmed that Facebook still showed job ads differently to men and women, while LinkedIn’s system showed no such skew.

- Job-matching platforms like ZipRecruiter use recommendation systems to connect candidates with suitable jobs. These systems rely on content-based filtering, which is based on an individual’s own actions, and on collaborative filtering, which is based on the behaviour of similar users. While these systems help surface relevant roles, they can unintentionally reinforce stereotypes. For example, if women primarily explore lower-paying jobs, the algorithm may keep recommending only such roles, limiting their opportunities. Collaborative filtering can also spread one subgroup’s patterns to every user who resembles it. Even without explicit gender or ethnicity data, unconscious bias often enters through patterns in user behaviour. Research shows that men tend to apply more boldly, which an algorithm may interpret as higher suitability, disadvantaging women. LinkedIn addressed this by ensuring balanced exposure, showing jobs to equal proportions of men and women, which helps avoid skewed results.
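The point about gender-coded wording can be made concrete with a much simpler word-list approach than any commercial product would use. This is not Textio’s method. The two stem lists are hypothetical and deliberately tiny, and the score is a crude ratio rather than a validated measure. The sketch only shows that coded wording in an ad is something a program can detect and count.

```python
import re

# Hypothetical, deliberately short lists of word stems. Real tools use much
# larger, validated vocabularies and context-aware models.
MASCULINE_CODED = ("aggressive", "ambitio", "competitive", "dominant", "fearless", "rockstar")
FEMININE_CODED = ("collaborat", "support", "understanding", "nurtur", "interpersonal", "empath")


def coded_words(text: str, stems: tuple[str, ...]) -> list[str]:
    """Return the words in `text` that start with any of the given stems."""
    words = re.findall(r"[a-z]+", text.lower())
    return [w for w in words if w.startswith(stems)]


def tone_score(text: str) -> float:
    """Crude score in [-1, 1]: negative leans masculine-coded,
    positive leans feminine-coded, 0 means balanced or no coded words."""
    masculine = len(coded_words(text, MASCULINE_CODED))
    feminine = len(coded_words(text, FEMININE_CODED))
    total = masculine + feminine
    return 0.0 if total == 0 else (feminine - masculine) / total


ad = "We want an aggressive, competitive rockstar who thrives under pressure."
print(coded_words(ad, MASCULINE_CODED))  # ['aggressive', 'competitive', 'rockstar']
print(tone_score(ad))                    # -1.0
```

Swapping “competitive” for “collaborative” would pull the score towards zero. The point is that wording effects are measurable. A word list does not capture them well, because it ignores context entirely.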
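The feedback loop described in the last point can be reproduced with a toy user-based collaborative filter. Everything in this sketch is hypothetical: the users, job labels, and click data are invented. Real platforms such as ZipRecruiter use far more elaborate models that are not public. The filter recommends jobs clicked by users whose histories overlap with the new user’s.

```python
# Hypothetical click history: user -> set of job ids the user explored.
CLICKS = {
    "u1": {"admin_assistant", "receptionist", "data_entry"},
    "u2": {"admin_assistant", "receptionist", "office_coordinator"},
    "u3": {"software_engineer", "data_scientist", "engineering_manager"},
    "u4": {"software_engineer", "devops_engineer", "engineering_manager"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Similarity of two users' click sets: size of intersection over union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user_clicks: set[str], history: dict[str, set[str]], k: int = 3) -> list[str]:
    """User-based collaborative filtering: each unseen job is scored by the
    summed similarity of the users who clicked it; return the top-k jobs."""
    scores: dict[str, float] = {}
    for other_clicks in history.values():
        sim = jaccard(user_clicks, other_clicks)
        for job in other_clicks - user_clicks:
            scores[job] = scores.get(job, 0.0) + sim
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores, key=lambda job: (-scores[job], job))
    return [job for job in ranked if scores[job] > 0][:k]


print(recommend({"admin_assistant"}, CLICKS))
# ['receptionist', 'data_entry', 'office_coordinator']
```

The engineering roles never appear, even though the data contains no gender field at all. The skew comes entirely from which existing users the new user happens to resemble. If one cohort historically clicked mostly lower-paying roles, anyone who looks like that cohort inherits its recommendations.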

This means that before anyone applies, AI is already shaping who gets to participate in the opportunity pool.

2. Screening — Who Gets Past the First Gate?

Once applications are submitted, resume screeners and chatbots take over:

- CVViZ uses NLP and machine learning to go beyond keyword matching when screening resumes, ranking candidates by their experience and by how well they meet the job requirements. However, such systems risk reinforcing bias by replicating existing patterns, which leads to homogeneity in hiring. Research shows that algorithms trained on real-world data can absorb discriminatory associations. Examples include devaluing African-American names and associating female names with housework rather than with professional or technical roles.

- By 2015, Amazon had realised that its experimental AI recruiting tool favoured men for technical roles. The cause was its training data: a decade of resumes submitted mostly by men. A separate audit of another resume-screening tool found even odder patterns. Being named “Jared” and having played high-school lacrosse emerged as the strongest predictors of success. Such cases show how NLP tools can replicate existing inequalities, disadvantaging minorities and candidates with different cultural or linguistic backgrounds. Preventing this requires screening tools and hiring chatbots to be deliberately designed and tested so that they do not reinforce social biases.

- Mya is a recruiting chatbot that engages with candidates before they formally apply: it asks screening questions, interprets the responses, and suggests suitable jobs. Using NLP and decision trees, it simulates natural conversation. Mya was developed in 2012 by Eyal Grayevsky and James Maddox and was later acquired by StepStone.
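The mechanism behind the Amazon case can be illustrated with a deliberately naive screener that learns a weight for each word from past hiring decisions. The resumes and outcomes below are invented. The scoring rule, smoothed log-odds of being hired given a word, is a stand-in chosen for clarity; it is not how Amazon’s or CVViZ’s systems work. Press coverage of the Amazon case reported that the word “women’s” was penalised. The toy model reproduces that effect because, in the invented history, no hired candidate’s resume used the word.

```python
import math
import re
from collections import Counter

# Invented historical data: (resume text, was the candidate hired?).
HISTORY = [
    ("java developer chess club captain", True),
    ("python developer rugby team", True),
    ("java python developer", True),
    ("python developer women's chess club captain", False),
    ("java developer women's coding society", False),
    ("python developer chess club", True),
]


def tokens(text: str) -> set[str]:
    """Lower-cased words, keeping apostrophes so "women's" stays one token."""
    return set(re.findall(r"[a-z']+", text.lower()))


def learn_weights(history: list[tuple[str, bool]], alpha: float = 1.0) -> dict[str, float]:
    """Per-word smoothed log-odds of 'hired' versus 'rejected' in past decisions.
    The model only mirrors past outcomes; it has no notion of fairness."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        (hired if was_hired else rejected).update(tokens(text))
    vocab = set(hired) | set(rejected)
    return {w: math.log((hired[w] + alpha) / (rejected[w] + alpha)) for w in vocab}


def score(text: str, weights: dict[str, float]) -> float:
    """Sum of learned word weights; unknown words contribute nothing."""
    return sum(weights.get(w, 0.0) for w in tokens(text))


weights = learn_weights(HISTORY)
print(round(weights["women's"], 2))  # -1.1
plain = "python developer chess club captain"
flagged = "python developer women's chess club captain"
print(score(flagged, weights) < score(plain, weights))  # True
```

Nothing in the code refers to gender. The penalty is learned purely from who was hired before, which is why simply deleting explicit gender fields does not remove this kind of bias.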

3. Assessment — Who Gets to Prove Themselves?

Here’s where things get even more concerning. AI-powered assessment tools evaluate candidates through online games, or by analysing their speech, facial expressions, and even micro-behaviours during video interviews:

- Pymetrics uses neuroscientific games to assess about 90 cognitive, emotional, and social traits, comparing applicants’ results to those of a company’s top performers. While this aims to identify strong candidates, it risks bias if training data reflects demographic imbalances or subjective definitions of “top performance.” To counter this, Pymetrics developed Audit AI, an open-source tool that detects and reduces bias in predictions. Still, the approach may exclude equally capable candidates who don’t share the same traits as existing high performers, especially if training data quality is limited.

- HireVue uses AI to evaluate video interviews by analysing speech, tone, facial expressions, and word choice, generating an “Insight Score” to rank candidates. While the system is designed to standardise and speed up hiring, critics argue that it risks bias and unfair rejections. The company says it tests and adjusts its models to avoid discrimination. Even so, the Electronic Privacy Information Center (EPIC) filed a complaint in 2019 calling the system biased and unprovable. Following this criticism, HireVue dropped facial analysis in 2021 and focused instead on language analysis and chat-based assessments. Still, research shows that such tools can be error-prone, especially with non-native speakers and people of colour, raising ongoing concerns about fairness and accuracy.

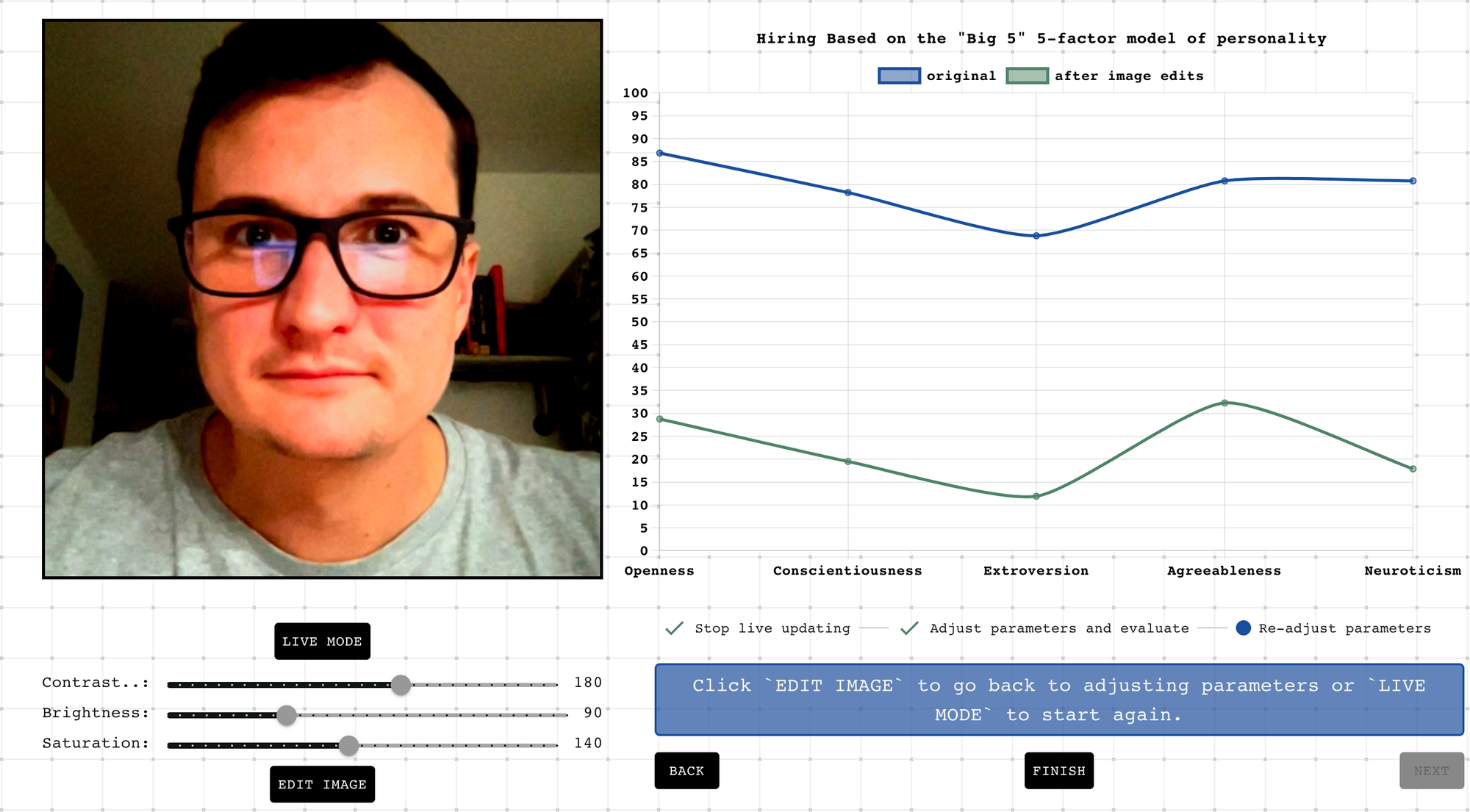

- In 2021, Bayerischer Rundfunk (BR) tested Retorio’s AI hiring software, which rates personality traits using the OCEAN (Big Five) model. The experiment showed that scores varied significantly when candidates changed their appearance (e.g., wearing glasses or a headscarf) or even the background, raising doubts about the system’s reliability. Researchers confirmed that small changes in image brightness or contrast could alter personality scores. Critics argue that such AI tools lack scientific grounding, risk amplifying racial and gender biases, and can unfairly affect workers’ livelihoods. As these opaque systems gain influence in hiring, concerns are growing over accountability and the need for fairer approaches to AI in recruitment.
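The “compare applicants with top performers” approach described for Pymetrics can be sketched as distance to a benchmark profile. This is a hypothetical illustration, not Pymetrics’ model: the three traits, the scores, and the Euclidean-distance rule are all assumptions. It reproduces the exclusion risk mentioned above. A candidate who differs from the current top performers scores worse than one who simply resembles them.

```python
from math import dist
from statistics import mean

# Hypothetical trait scores (0-1) for three made-up traits:
# (risk_taking, attention, patience).
TOP_PERFORMERS = [
    (0.9, 0.7, 0.3),
    (0.8, 0.6, 0.2),
    (0.85, 0.65, 0.25),
]


def benchmark(profiles: list[tuple[float, ...]]) -> tuple[float, ...]:
    """Trait-wise average of the current top performers."""
    return tuple(mean(values) for values in zip(*profiles))


def fit_score(candidate: tuple[float, ...], profile: tuple[float, ...]) -> float:
    """Higher means 'better fit': the negative Euclidean distance to the benchmark."""
    return -dist(candidate, profile)


profile = benchmark(TOP_PERFORMERS)
lookalike = (0.85, 0.6, 0.3)  # resembles the existing top performers
different = (0.4, 0.9, 0.9)   # cautious, attentive, very patient

print(fit_score(lookalike, profile) > fit_score(different, profile))  # True
```

The score never measures job performance directly. It measures resemblance to whoever is already labelled a top performer, so any demographic skew in that group is built into the benchmark.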

Instead of eliminating bias, these systems sometimes repackage it in ways that are harder to detect — and harder to challenge.

The Illusion of Objectivity

One of the most important lessons I learned is that AI doesn’t necessarily remove bias. In fact, it can make bias harder to spot. Humans can explain their reasoning (“I thought this candidate lacked confidence”), but algorithms often work in black boxes. We don’t know which variables tipped the scales, or even whether the system was trained on representative data.

That’s dangerous — because it gives companies a false sense of neutrality. The technology looks objective, but underneath it may simply be amplifying old inequalities.

So, What Can We Do?

The future of recruitment doesn’t have to be dystopian. There are meaningful steps we can take to make AI fairer and more transparent:

1. Independent Reviews

Efforts are underway to regulate AI in hiring through independent reviews and legal frameworks. HireVue, for example, partnered with ORCAA for external audits. Tools like HireVue’s now fall under New York City’s Local Law 144 (introduced as bill Int. 1894-2020), which requires independent bias audits of automated hiring software. In the U.S., the EEOC launched an initiative in 2021 to ensure that recruitment tools comply with federal civil rights laws. In Europe, experts like Katharina Zweig have called for strict regulation, including a ban on personality-measurement tools that disadvantage applicants. While the GDPR protects personal data, further debate is needed to determine which AI hiring applications are fair, non-discriminatory, and socially acceptable.
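Bias audits of this kind usually start from a simple calculation. Each group’s selection rate is divided by the selection rate of the most-selected group, giving what is known as the impact ratio. NYC’s audit rules report results as impact ratios. The long-standing “four-fifths rule” used by the EEOC treats a ratio below 0.8 as a sign of possible adverse impact. The sketch below computes both from invented counts, and the group labels and numbers are hypothetical. A real audit covers more than this one statistic, for example intersectional categories and sample sizes.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to (number selected) / (number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate relative to the most-selected group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; impact ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Invented results from a hypothetical AI resume screener: (selected, applicants).
results = {"group_a": (120, 400), "group_b": (45, 250), "group_c": (60, 220)}

print({g: round(r, 2) for g, r in impact_ratios(results).items()})
# {'group_a': 1.0, 'group_b': 0.6, 'group_c': 0.91}
print(flag_adverse_impact(results))  # ['group_b']
```

The arithmetic is trivial. The hard parts of an audit are obtaining reliable demographic data and deciding what to do once a tool is flagged.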

2. Transparency and Candidate Rights

Companies using AI in recruitment must ensure radical transparency. Employers should clearly explain how AI tools are applied throughout the hiring process, document decisions in detail, and provide feedback to applicants—especially after negative outcomes. Candidates must be informed about what data is collected, how it is used, and which attributes influenced the decision. Transparency and communication are essential not only for fairness but also to maintain trust in AI-driven hiring.

3. Training HR Professionals

HR professionals need a clear understanding of the limits and risks of AI in recruitment. Time pressure makes these tools attractive, but critical awareness is essential to avoid blindly trusting opaque systems. Scrutinising AI vendors more closely helps HR evaluate both job boards and candidate-assessment tools. That means demanding transparency about where and how their algorithms are applied. Only with this awareness can the real benefits and risks of AI in hiring be properly weighed.

4. Shifting the Goal

Instead of making AI simply “fair,” why not use it to actively amplify marginalised voices? For example, rethinking hiring around digital credentials and lifelong learning records could open opportunities for underrepresented groups.

Rethinking the Future of Hiring

At the end of my research, I kept coming back to one question: Can we afford to let machines make life-changing decisions about people’s careers?

AI can help — no doubt. It can write more inclusive job ads, process thousands of resumes quickly, and even highlight overlooked talent. But it should never replace critical human judgment, especially when livelihoods are at stake.

If we want AI to truly advance diversity and equity, we need to:

- Challenge its assumptions,

- Hold companies accountable for how they deploy it, and

- Keep humans in the loop for decisions that affect people’s futures.

AI in recruitment is here to stay. The real question is: will we shape it into a tool for fairness, or will we let it reinforce the inequalities of the past?

That’s the conversation I want to spark with my research — and I hope this article has given you a glimpse into both the promises and pitfalls of AI in hiring.

What do you think? Would you feel comfortable being evaluated by an algorithm in your next job application?

You can read the complete research paper here.